About 450 years ago, Galileo asked: "What mysterious structures and mechanisms work inside the human mind to create music?" It is a question that still remains mostly unanswered... But don't worry, and try to remain unbiased! Artificial intelligence will not 'wipe out' the artist or the arts, nor will machine learning make better music or art. For more than a decade, artificial intelligence has been signalling its presence on the horizon of emerging music technology, a presence that is now about to affect our creativity, skills, production and, consequently, the music itself. 'Machine learning' has followed suit, harnessing all the supposed 'intelligence' gathered (assimilated) by supercomputers. Virtually anything can be mapped and codified. Many would simply say 'so what?' One cannot codify style or digitally mimic natural nuances (and anomalies). Yet persistent research and development in computer-based music has yielded results quite contrary to common beliefs. Code is chipping away at the bias! This feature investigates the current tides in music-related artificial intelligence and dares to speculate about what's coming up on the horizon!

Learning is Artificial

Institutions looping inside decades of hegemony have held firmly onto the notion that 17th-, 18th- and 19th-century Western classical music was 'the' "golden period" of musical evolution, witness to incredibly talented composers like Bach, Mozart, Beethoven, Chopin and Wagner. The period is often pegged as "a time of unparalleled progress and formation of complex music". In hindsight, the narrative comes across as an example of white historicism mixed with chauvinism. From Plato to Alexander to Napoleon to Wagner, a culture built on historicism keeps perpetuating its icons centuries on. Hence a large part of this music (and of learning it) remains accessible only inside such institutions and through their chosen 'masters'. However, that overarching gap (and bias) is being dismantled and heckled by computer science and AI. The University of California, Santa Cruz (UC Santa Cruz) has been a pioneering ground for deconstructing Western classical music with the aim of creating AI programs that compose classical music without musicians. J.S. Bach versus microchips! In the early 1980s, composer, author and professor David Cope began writing code and basic models at UC Santa Cruz that would learn, assimilate and reproduce Western classical music, specifically the vast body of work by J.S. Bach. Cope's aim was to produce new compositions that would sound (and feel) like pieces written by the classical icons. He eventually created EMI, 'Experiments in Musical Intelligence', a program that would learn, compile and generate classical pieces. The results were shocking! Could a legacy be re-created by code? Some composers were shocked, some denounced it as mimicry, and some even rushed to announce the 'end of humanities in music'. However, all these slapdash reactions are mostly inconsequential to the trajectory of progress, which is now driven by smart computing and AI.

"Initially I wanted to write a program which could learn and assimilate famous classical pieces. Some time later the program was revamped to generate notes, chords, intervals and spaces for the various instruments of an imaginary orchestra. The program literally learned thousands of Bach compositions, short and long. Over time and endless revisions, it became possible to generate a convincing 'Bach chorale' or a 'Schubert in C minor', or even to build my own 'Cope model'. Consequently I realised that this method of composition was a whole new frontier, almost like a rabbit hole, and that computer-based composers could eventually use AI to make all kinds of music...", explains Professor Cope. He later released the album 'From Darkness, Light' on Centaur Records, composed by Emily Howell, the 'artificially intelligent' successor program to EMI. Taking this form of composition from concept to possibility to tangible outcome took almost a decade. In 2005 EMI was retired: its databases were destroyed, and Professor Cope moved on to further challenging areas, still dealing with AI and music composition. Cope is also co-founder and CTO of Recombinant Inc, a music technology company.

Learning Machine

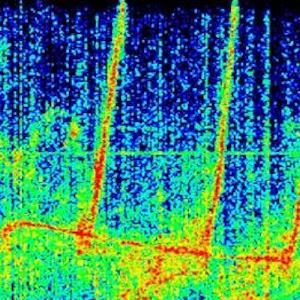

"The computer mimics the human brain, but in a primitive way as of now. Hence AI and machine learning are somewhat similar to the cognitive skill formation of babies, which is always assimilating the useful and rejecting the extraneous. The computer memorises tunes thousands of times faster than an adult musician. This piece of code inside a computer, however brilliant, has no active reasoning or value judgement. Its growth and potential always depend on its author. That vital capacity to ascertain value is yet to come, and it seems improbable that it will happen anytime soon...", states Miller Puckette (writer and theorist). In a nutshell, Cope's approach breaks this kind of machine learning into three steps. First, 'deconstruction' (analysis and separation into parts). Second, 'signatures' (commonality: for example, what makes Mozart sound different from Chopin?). Lastly, 'compatibility' ('recombinancy': the ability to recombine the parts into new works). It is a cognitive process that tries to mimic how human neural networks function, and how a musician absorbs and assimilates musical knowledge, yet without a heartbeat, hormones, fatigue, cultural bias or even 'writer's block'. So is it actually music? You hear and decide...
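To make the three steps a little more concrete, here is a minimal Python sketch under loudly stated assumptions. It is not Cope's EMI code: the toy corpus, the function names `deconstruct`, `signatures` and `recombine`, and the choice of a first-order Markov chain for the recombination step are all hypothetical stand-ins chosen for brevity. Melodies are plain lists of MIDI pitch numbers.

```python
import random
from collections import Counter, defaultdict

# Hypothetical toy corpus: each "piece" is a melody of MIDI pitch numbers.
CORPUS = [
    [60, 62, 64, 65, 67, 65, 64, 62, 60],
    [67, 65, 64, 62, 60, 62, 64, 62, 60],
    [60, 64, 67, 65, 64, 62, 60],
]


def deconstruct(pieces):
    """Step 1: break every piece into note-to-note transitions."""
    transitions = defaultdict(list)
    for piece in pieces:
        for current, following in zip(piece, piece[1:]):
            transitions[current].append(following)
    return transitions


def signatures(pieces, length=3, min_pieces=2):
    """Step 2: keep short melodic patterns shared by several pieces."""
    counts = Counter()
    for piece in pieces:
        # A set, so each pattern is counted at most once per piece.
        counts.update({tuple(piece[i:i + length])
                       for i in range(len(piece) - length + 1)})
    return {pattern for pattern, n in counts.items() if n >= min_pieces}


def recombine(transitions, start, length, seed=0):
    """Step 3: walk the learned transitions to build a new melody."""
    rng = random.Random(seed)
    melody = [start]
    # Stop early if the current note never had a successor in the corpus.
    while len(melody) < length and transitions.get(melody[-1]):
        melody.append(rng.choice(transitions[melody[-1]]))
    return melody


table = deconstruct(CORPUS)
print(sorted(signatures(CORPUS)))
# → [(60, 62, 64), (64, 62, 60), (65, 64, 62), (67, 65, 64)]
print(recombine(table, start=60, length=8))
```

Because the walk only ever follows transitions that already occur in the corpus, every note-to-note move in the output has a precedent in the training material. In this toy, that is both why the result sounds 'in style' and why critics call such output mimicry.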

Rewards In The System

Our minds need 'little rewards' from time to time as we pursue our creativity: not praise or trophies, but self-reinforcing gifts that are vital for keeping our focus on the objective. Cognitive skills require external responses and stimuli, without which there would be no tangible outcome. Whether it is a piece of art, music or poetry, or even weaving a basket or building a sandcastle, cognitive skills are at play. Some current machine learning models in music use similar 'reward systems' defined within their code. Known as 'reinforcement learning', this machine learning technique teaches a piece of software (call it an AI agent) to decide which action to take next in order to reach the desired objectives, with each step reinforced by maximising "cumulative rewards". Unlike supervised learning (student/teacher), reinforcement learning does not require labelled input and output data. This allows the AI to "find its own way" around the data and improve its performance. But the program is not listening; you are, and it is we, as listeners, who value the output. The "improve" bit is a highly debated part of the subject among developers, post-modern philosophers and AI critics. If there is any 'soul' in music, it is most often found in the relationship between the music, the performers and the listener, plus the given cultural conditions and our personal emotional responses to the music. So does it really matter whether the piece of music you're listening to (and not looking at) was created by a real human being playing a real instrument or by a virtual composer using many invisible channels?
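A minimal sketch of such a reward loop, assuming tabular Q-learning (one standard reinforcement learning method, picked here for brevity, not the method of any particular music system) over seven scale degrees. The reward function `listener_reward` is a hypothetical, hand-written stand-in that simply favours stepwise motion and penalises wide leaps; nothing in the loop actually hears the melody, which is exactly the point made above. All names and parameter values (`alpha`, `gamma`, `epsilon`, episode counts) are illustrative assumptions.

```python
import random

N_DEGREES = 7  # hypothetical scale degrees 0..6, where 0 is the tonic


def listener_reward(current, nxt):
    """Hypothetical stand-in for a listener: reward steps, penalise wide leaps."""
    interval = abs(nxt - current)
    if interval == 1:
        return 1.0
    if interval > 2:
        return -1.0
    return 0.0


def train(episodes=2000, length=16, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning: the state is the current degree, the action is the next one."""
    rng = random.Random(seed)
    # q[state][action] estimates the cumulative (discounted) reward of that move.
    q = [[0.0] * N_DEGREES for _ in range(N_DEGREES)]
    for _ in range(episodes):
        state = 0  # every melody starts on the tonic
        for _ in range(length - 1):
            # Epsilon-greedy: usually exploit the best-known move, sometimes explore.
            if rng.random() < epsilon:
                action = rng.randrange(N_DEGREES)
            else:
                action = max(range(N_DEGREES), key=lambda a: q[state][a])
            reward = listener_reward(state, action)
            # The melody is cut off at a fixed length rather than truly ending,
            # so the update always bootstraps from the next state's best value.
            target = reward + gamma * max(q[action])
            q[state][action] += alpha * (target - q[state][action])
            state = action  # the chosen note becomes the new state
    return q


def compose(q, length=8):
    """Follow the learned greedy policy, starting from the tonic."""
    melody = [0]
    while len(melody) < length:
        current = melody[-1]
        melody.append(max(range(N_DEGREES), key=lambda a: q[current][a]))
    return melody


q_table = train()
print(compose(q_table))  # a sequence of scale degrees
```

Swapping in a different `listener_reward` changes the 'taste' the agent converges to; the agent itself has no opinion, only a running estimate of which moves have paid off.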

The music is not real?!

"While most industry experts see the changes computers brought to production and performance as the more impressive innovations, computers as composers offer incredible possibilities that are only now beginning to come to light," states Professor David Cope, now considered a pioneer of AI-based composition. One wonders why so few classical musicians have embraced this brilliant new tool. For fear of losing their jobs and/or credibility? Or is it just not good enough yet? We humans, whether factory workers or talented musicians, behave very similarly when faced with the emerging impact of AI. A mainstream TV feature exalts EMI as "Bringing Bach and Mozart back to life", as the jolly narrator begins to speculate about the return of the golden age of classical music. However, that is not going to happen. Code is not bringing anything back to 'life'. It is we human beings who have to decide whether 'Frankenstein' is making a comeback or not. Instead, EMI and all such research are breaking down some barriers of formal institutional knowledge and hierarchy. AI's presence in music is not about replacing human beings but about creating new possibilities and opening up access to musical knowledge. "'Computers have no soul. The music is not real...' is something we all have heard as artists at some point. The vilification of emerging electronic music in the late 80s by some rock and jazz musicians did not matter in the end. Today the same bias is resurfacing, as AI and machine learning disrupt conventional norms and practices. AI and machine learning are set to enter consumer-level music software as we speak," states Carmen Molina, who is studying computer-based composition at Ramon Llull University in Barcelona.

Music is a language, and its formation has taken place over thousands of years. It is a language that witnesses constant change and evolution, and is also made of mistakes, recalibration, revision and even the occasional 'revolution'. Mozart and Beethoven made mistakes while composing, as did the Beatles, Charlie Parker and every talented and trained musician, and the same is true of current AI and machine learning models in music. However crude, these models are as legitimate a tool as a guitar, piano or synthesizer. Though still at a nascent stage, AI and machine learning are bound to play an increasing role in (and have increasing influence on) how music will be composed, produced and consumed in the future. Questions of 'soul', 'feel' and 'talent' have already become redundant given the overwhelming presence of technology (and computation) in music. After all, 'masterpieces' are merely group preferences, and those too change over time. Pop music is all but digital. Love it or hate it, the singer will eventually be codified as well. We have had EMI generating Western classical music; tomorrow we will have more sophisticated, user-defined models to generate, compile and mix various kinds of music and sound for various environments. Bands may be made of 'talented robots'! Will AI manifest as mega DJs? Genres could be codified. Perhaps even legendary rockers? Could you, as a music producer, extend yourself into a program? Now come on! Bach or Mozart could not possibly have imagined Ableton, Logic or EMI, just as popular composers in the 1950s and 1960s could not imagine synthesis and automation, and just as today we cannot objectively predict the path that music composition and production will take in the future. Composition, production and labour might become AI. Spirit, imagination and creativity will remain human...

5,000 works by EMI available as free MIDI files

Our profound thanks to 'The Cross-Eyed Pianist' for the inspiration and information.
